Publications and Presentations

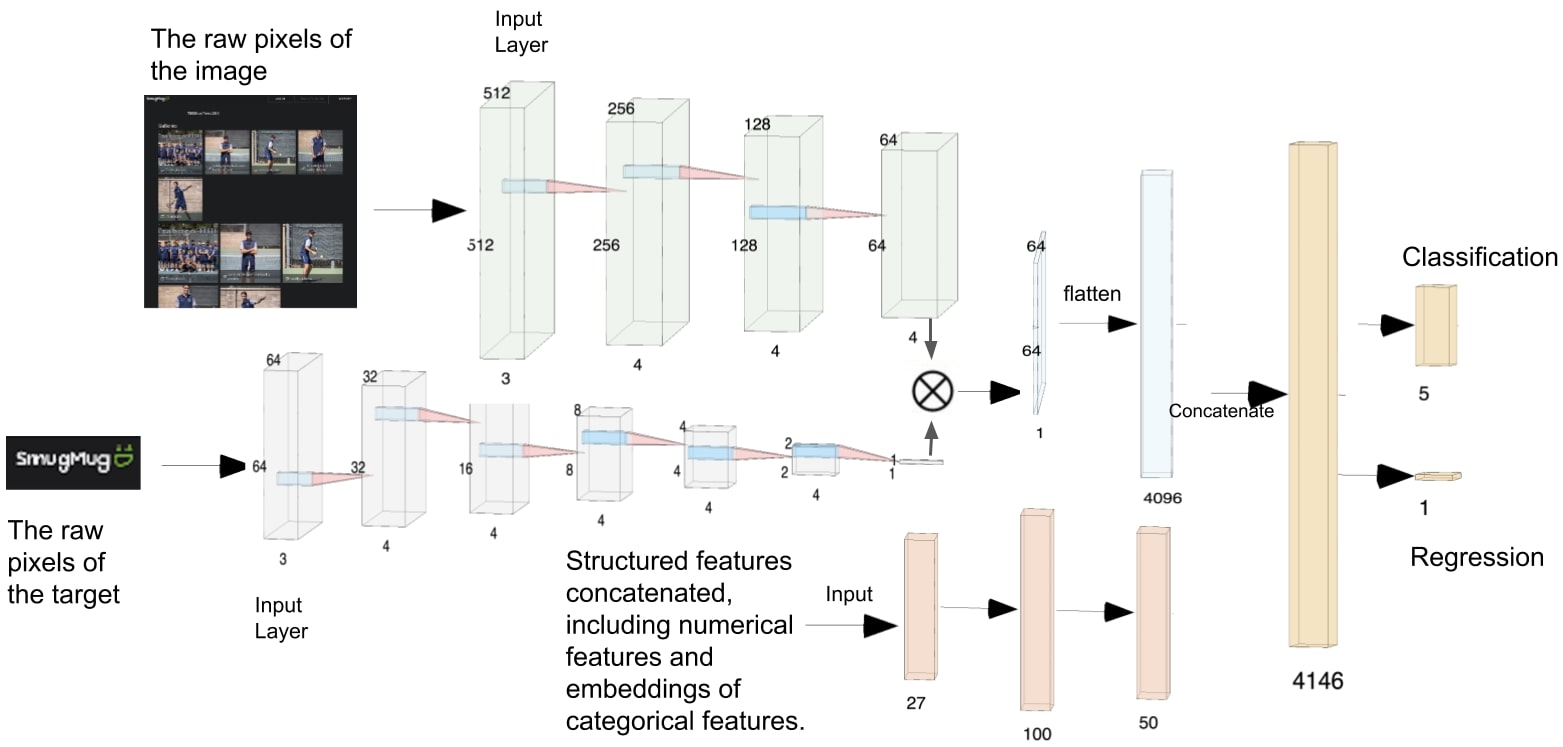

Arianna Yuan, Yang Li (2020). Modeling Human Visual Search Performance on Realistic Webpages Using Analytical and Deep Learning Methods. In Proceedings of the ACM CHI Conference on Human Factors in Computing Systems (CHI 2020). [PDF] [Video]

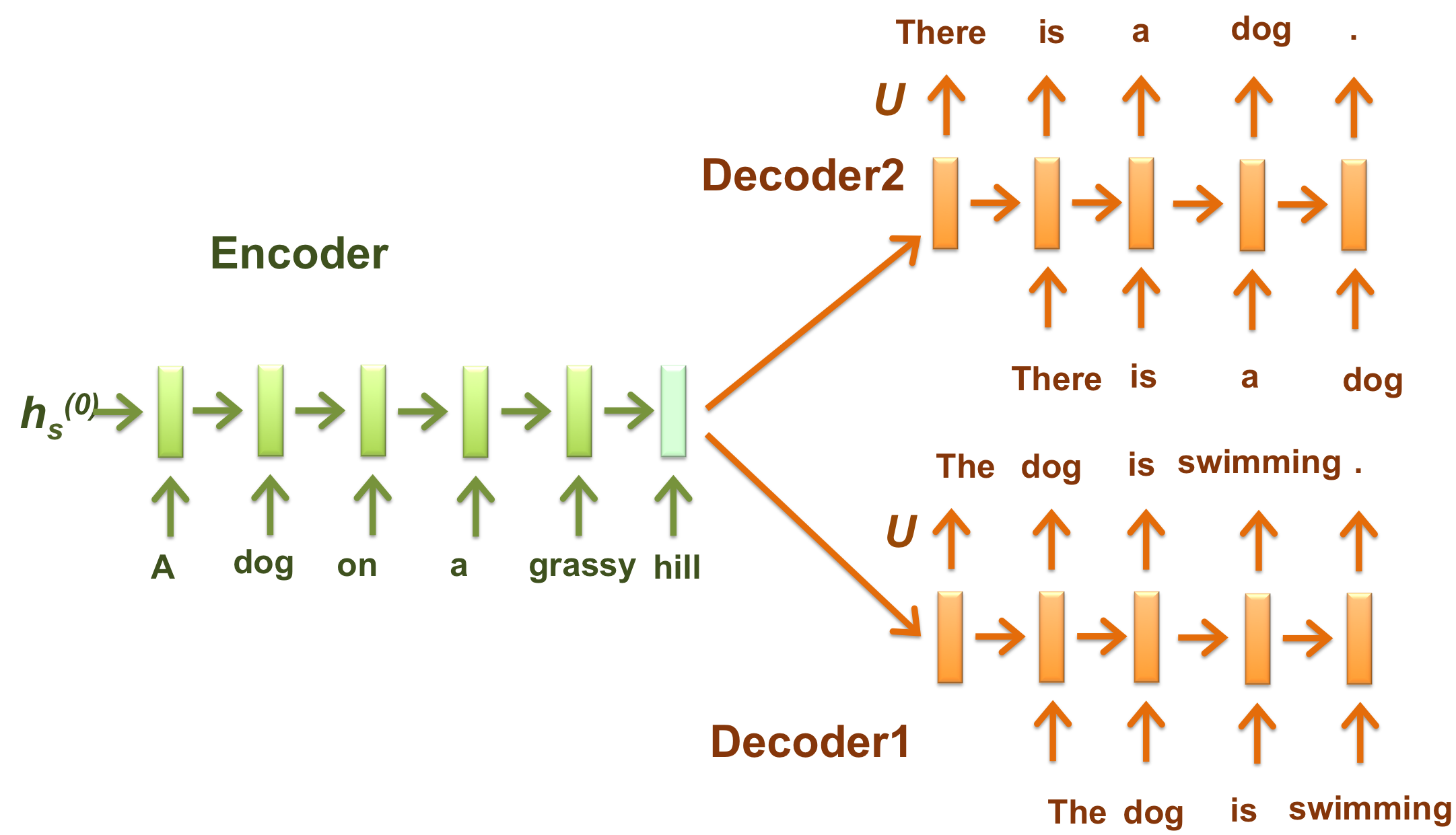

Xiaoya Li, Yuxian Meng, Arianna Yuan, Fei Wu, Jiwei Li (2020). LAVA NAT: A Non-Autoregressive Translation Model with Look-Around Decoding and Vocabulary Attention. arXiv preprint: arXiv:2002.03084. [PDF]

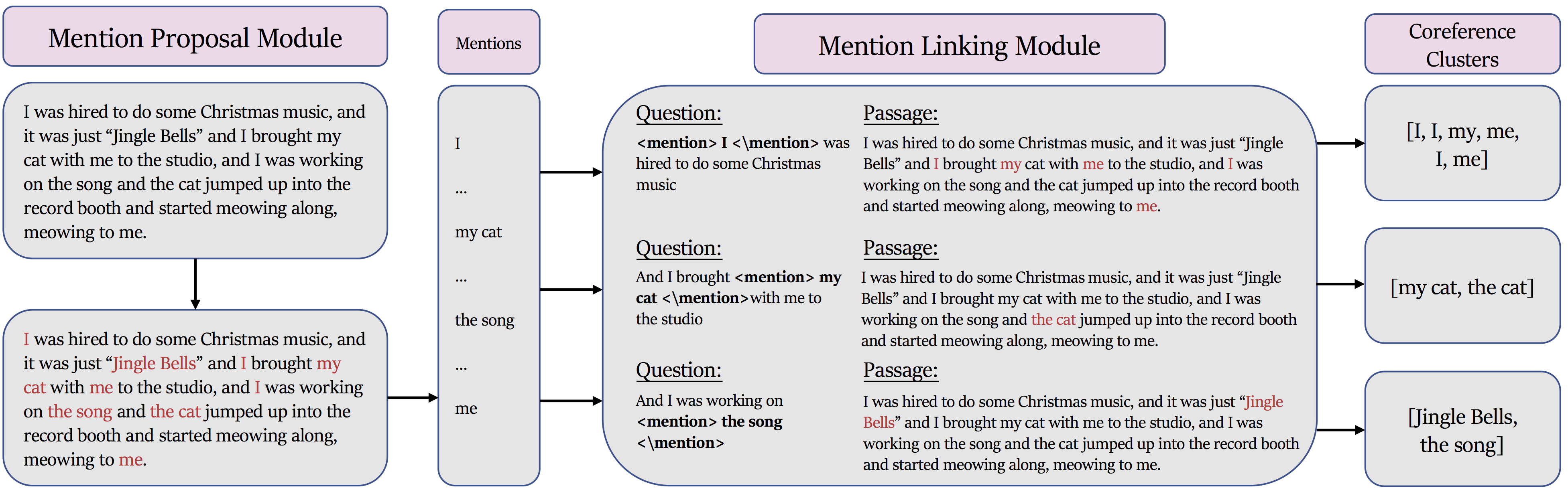

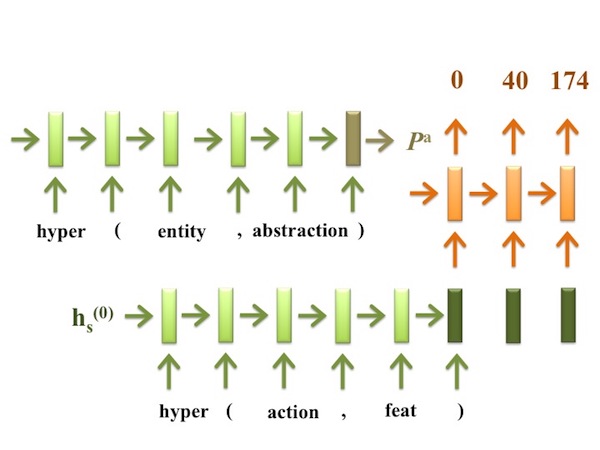

Wei Wu, Fei Wang, Arianna Yuan, Fei Wu, Jiwei Li (2020). Coreference Resolution as Query-based Span Prediction. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics (ACL 2020). [PDF]

Yuxian Meng, Xiangyuan Ren, Zijun Sun, Xiaoya Li, Arianna Yuan, Fei Wu, Jiwei Li (2019). Large-scale Pretraining for Neural Machine Translation with Tens of Billions of Sentence Pairs. arXiv preprint: arXiv:1909.11861. [PDF]

Arianna Yuan, Jay McClelland (2019). Modeling Number Sense Acquisition in A Number Board Game by Coordinating Verbal, Visual, and Grounded Action Components. In Proceedings of the 41st Annual Meeting of the Cognitive Science Society. [PDF]

Sizhu Cheng*, Arianna Yuan* (2019). Understanding the Learning Effect of Approximate Arithmetic Training: What was Actually Learned? In Proceedings of the 17th Annual Meeting of the International Conference on Cognitive Modeling. [PDF]

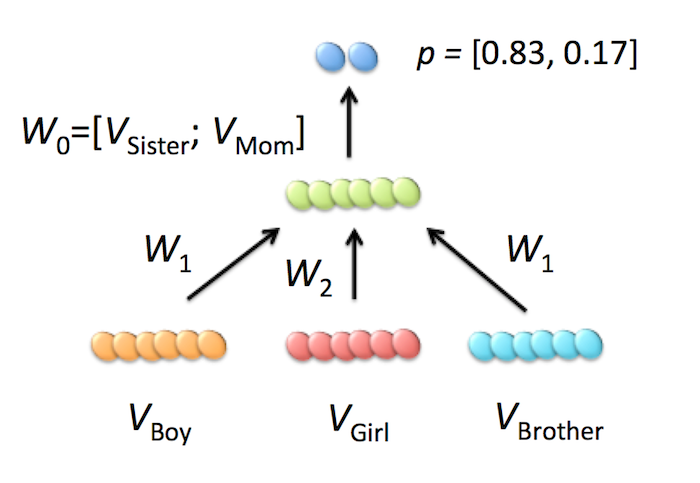

Yuxian Meng, Xiaoya Li, Xiaofei Sun, Qinghong Han, Arianna Yuan, Jiwei Li (2019). Is Word Segmentation Necessary for Deep Learning of Chinese Representations? In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics (ACL 2019). [PDF]

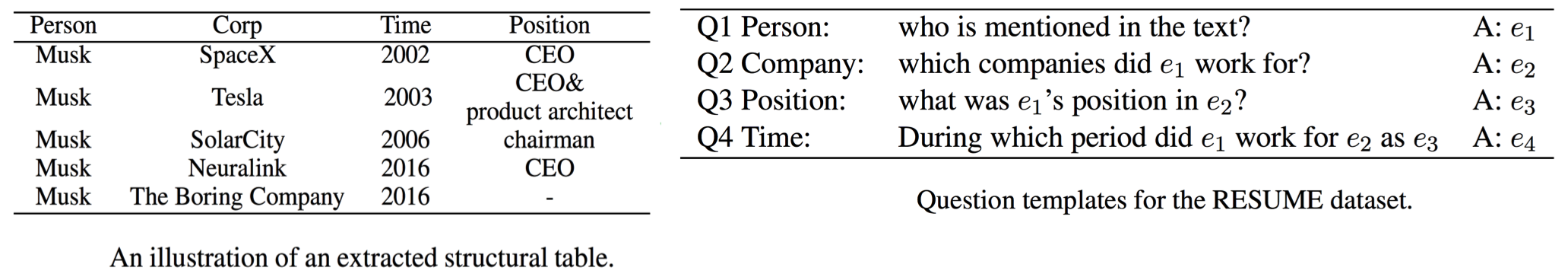

Xiaoya Li, Fan Yin, Zijun Sun, Xiayu Li, Arianna Yuan, Duo Chai, Mingxin Zhou, Jiwei Li (2019). Entity-Relation Extraction as Multi-turn Question Answering. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics (ACL 2019). [PDF]

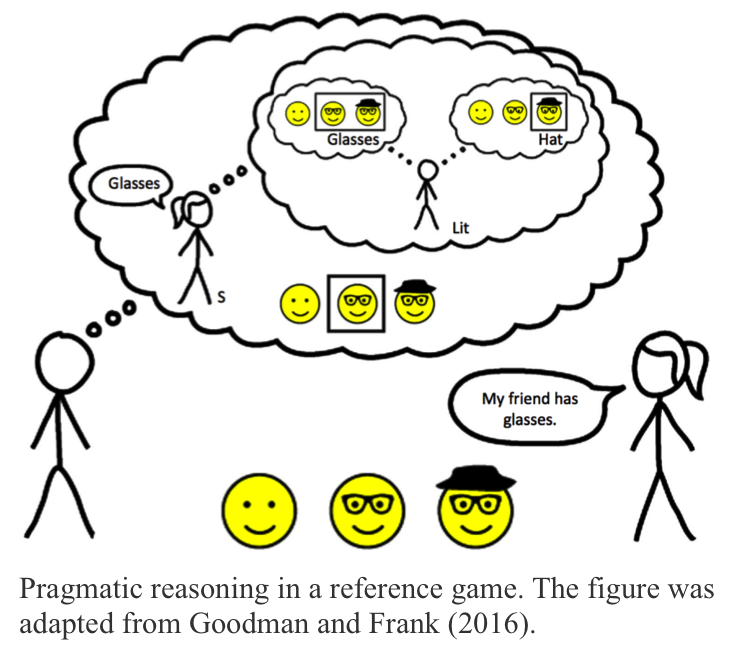

Arianna Yuan, Will Monroe, Yu Bai and Nate Kushman (2018). Understanding the Rational Speech Act Model. In Proceedings of the 40th Annual Meeting of the Cognitive Science Society. [PDF]

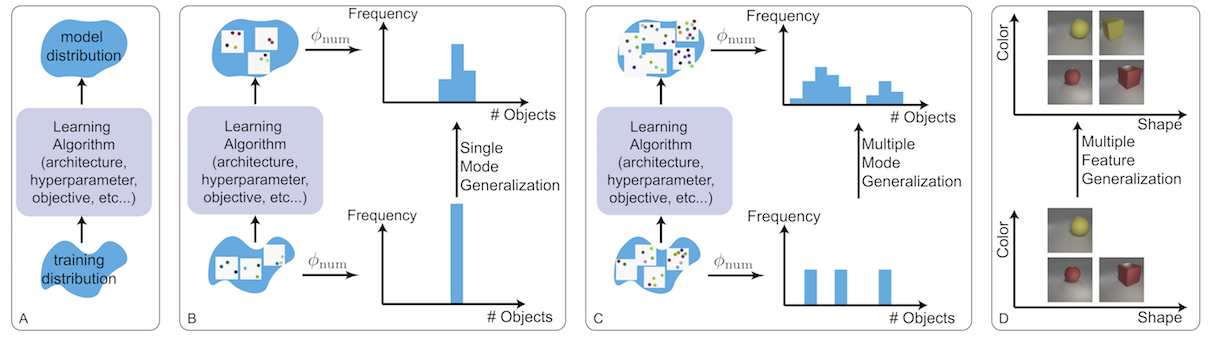

Shengjia Zhao, Hongyu Ren, Arianna Yuan, Jiaming Song, Noah Goodman, Stefano Ermon (2018). Bias and Generalization in Deep Generative Models: An Empirical Study. In Proceedings of the Thirty-Second Annual Conference on Neural Information Processing Systems (NIPS 2018). [PDF]

Arianna Yuan (2017). “So what should I do next?” – Learning to Reason through Self-Talk (in prep). [PDF]

Arianna Yuan (2017). Domain-General Learning of Neural Network Models to Solve Analogy Tasks – A Large-Scale Simulation. In Proceedings of the 39th Annual Meeting of the Cognitive Science Society. [PDF]

Arianna Yuan and Michael Henry Tessler (2017). Generating Random Sequences For You: Modeling Subjective Randomness in Competitive Games. In Proceedings of the 15th Annual Meeting of the International Conference on Cognitive Modeling. [PDF]

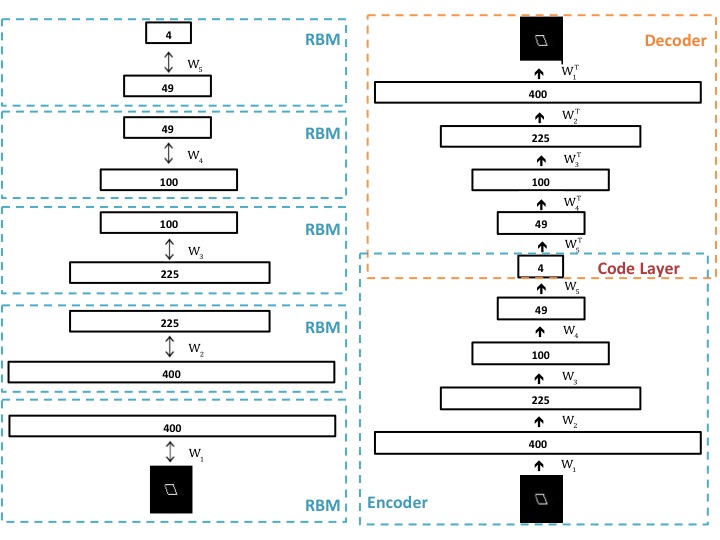

Arianna Yuan, Te-Lin Wu, James L. McClelland (2016). Emergence of Euclidean geometrical intuitions in hierarchical generative models. Presented at the 38th Annual Meeting of the Cognitive Science Society, Philadelphia, PA. [PDF]

Arianna Yuan (2014). A Computational Investigation of the Optimal Task Difficulty in Perceptual Learning. Presented at the 44th Annual Meeting of the Society for Neuroscience, Washington, DC.

Arianna Yuan (2012). Affective Priming Effects of Mean Facial Expressions. Presented at the 42nd Annual Meeting of the Society for Neuroscience, New Orleans, LA.

Arianna Yuan and Jay McClelland. The Representation of Negative Numbers (in prep).

Sun, Y., and Arianna Yuan. Applications of Emotion Models Based on HMM in Mental Health Forecast. Journal of Tianjin University (Social Sciences), 13(6): 531-536, 2011.